Learning in the open, GPT-5, AI news

I'm starting something new, join me 😊

In September I’ll be going freelance, working in the AI space. Although I’ve kind of been working in the general area of traditional ML and data science for some years, there’s a lot to catch up on, so I’m taking some time to upskill, read and think. I’m pretty excited about this. Learning is something I enjoy, though I never quite get the time to do enough of it, which is why I’m starting this Substack - more of a scratchpad than a traditional newsletter. I’m going to try to capture interesting things that I learn as I learn them. It’s also a way to log things and stay accountable. I expect it will be raw and unpolished, which in a world of increasingly polished and beige AI prose I think is a good thing - bad writing is the new good, sort of. My aim is to write authentically and honestly, primarily for myself, but I hope the struggle and journey resonate with others!

GPT-5 release

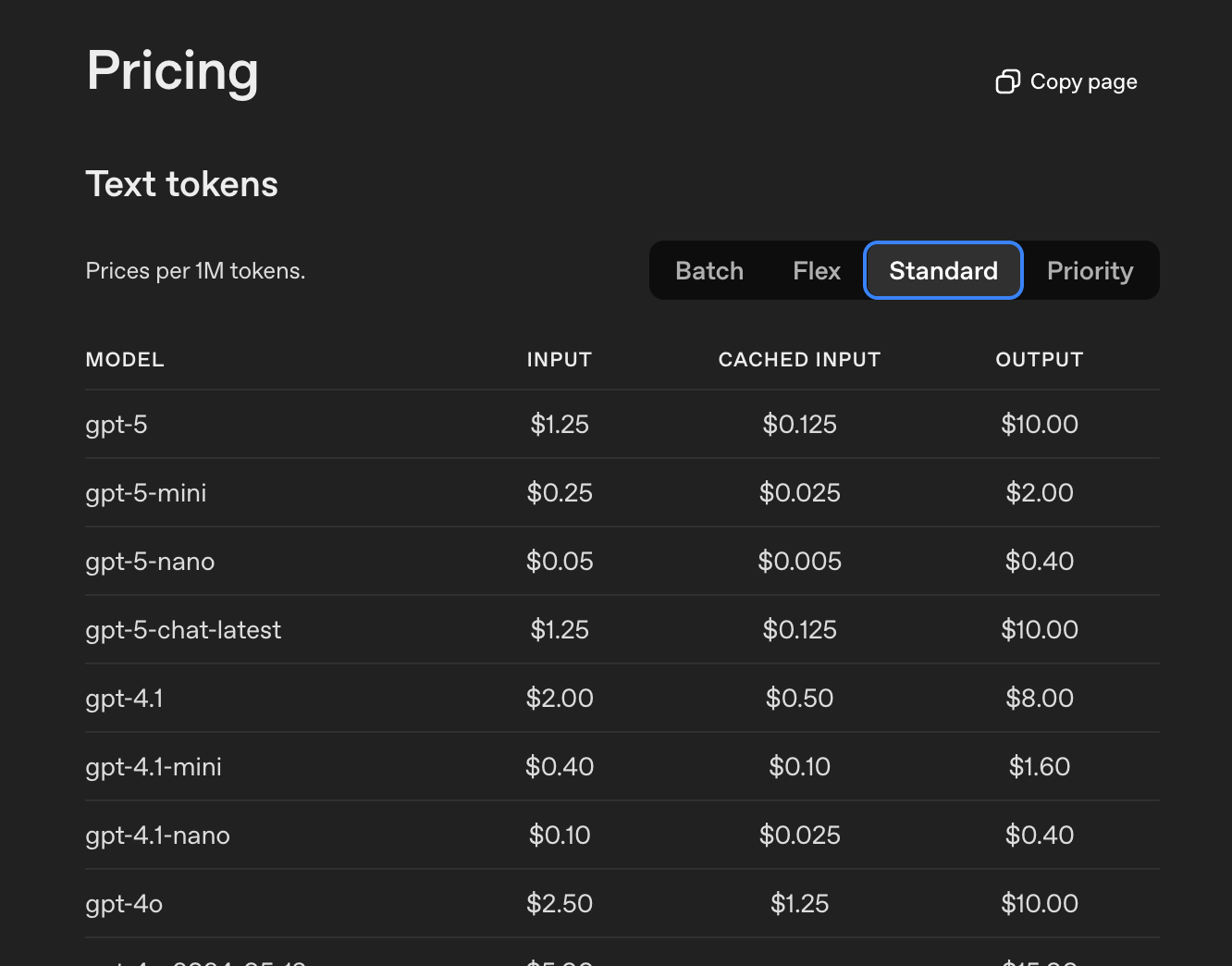

GPT-5 was released on Thursday 7th August - OpenAI’s official announcement posts don’t say too much. Simon Willison’s write-up “GPT-5: Key characteristics, pricing and model card” is a great place to start. The biggest changes seem to be a simplified range of models (naming was out of hand before with o3, o3-mini, 4o, 4.1-nano etc.), and very keen pricing on input tokens. GPT-5 is half the price of gpt-4o on input tokens, and gpt-5-nano is 5c / M input tokens (!). Not new to this release, but often overlooked: batch pricing for asynchronous, non-time-sensitive tasks is even cheaper.

There’s sort of a catch with the pricing - by default these are all reasoning models, and the number of output reasoning tokens can be sizable. I flipped my gpt-4o-mini summarisation processes over to gpt-5-nano, and noticed a huge uptick in output tokens, which more than wiped out the per-token savings and resulted in a doubling of costs! Updating the OpenAI Python package and adding reasoning={"effort": "minimal"} seemed to make things more comparable to the non-reasoning equivalents.
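To make the arithmetic concrete, here is a rough sketch of how reasoning tokens can swamp a cheaper per-token price. The prices are list prices as I understand them at the time of writing, and the token counts are made up for illustration (they are not my real usage numbers), so treat all of the figures as assumptions.

```python
# Per-million-token list prices in USD. These are assumptions for illustration;
# check the current pricing page before relying on them.
PRICES = {
    "gpt-4o-mini": {"input": 0.15, "output": 0.60},
    "gpt-5-nano": {"input": 0.05, "output": 0.40},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request; reasoning tokens are billed as output tokens."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


# Hypothetical summarisation request: 2,000 tokens in, 200 tokens of summary out.
INPUT_TOKENS = 2_000
SUMMARY_TOKENS = 200
# Hypothetical number of hidden reasoning tokens at the default reasoning effort.
REASONING_TOKENS = 1_500

old = request_cost("gpt-4o-mini", INPUT_TOKENS, SUMMARY_TOKENS)
default = request_cost("gpt-5-nano", INPUT_TOKENS, SUMMARY_TOKENS + REASONING_TOKENS)
# Assumes minimal effort produces close to zero reasoning tokens.
minimal = request_cost("gpt-5-nano", INPUT_TOKENS, SUMMARY_TOKENS)

print(f"gpt-4o-mini:                  ${old:.5f}")
print(f"gpt-5-nano (default effort):  ${default:.5f}")
print(f"gpt-5-nano (minimal effort):  ${minimal:.5f}")
```

With these made-up numbers the default-effort request costs a bit less than double the gpt-4o-mini one, even though every individual token is cheaper, while the minimal-effort request comes out well under half.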

Although the changes are great, and lower costs are awesome, it’s not the step up in capability or performance I’d expected with a major version bump. The Register commented that OpenAI's GPT-5 looks less like AI evolution and more like cost cutting - I’m not sure I’d go this far, but I wonder if it does point to a potential slowing in what can be achieved under the current training paradigm.

Other AI news

Claude Sonnet 4 1M token context in beta - it’s for tier 4 users initially and more costly per token, and seems aimed at packing more context into code agents for larger repos. I hope we see this rolling out in Claude Code / your favourite agent soon. As a side note, it got me wondering about the large context needle-in-a-haystack problem, which may now be essentially solved, but doesn’t perhaps get discussed as much as it should.

Thomas Dohmke resigned as GitHub CEO 'to become a founder again'. I thought this was interesting. Dohmke always has insightful things to say about the future of software development, even though GitHub Copilot has been lagging a bit lately on code agents. It’ll be interesting to see how this pans out both for GitHub, as it moves closer to its parent Microsoft, and for whatever Dohmke decides to build next.

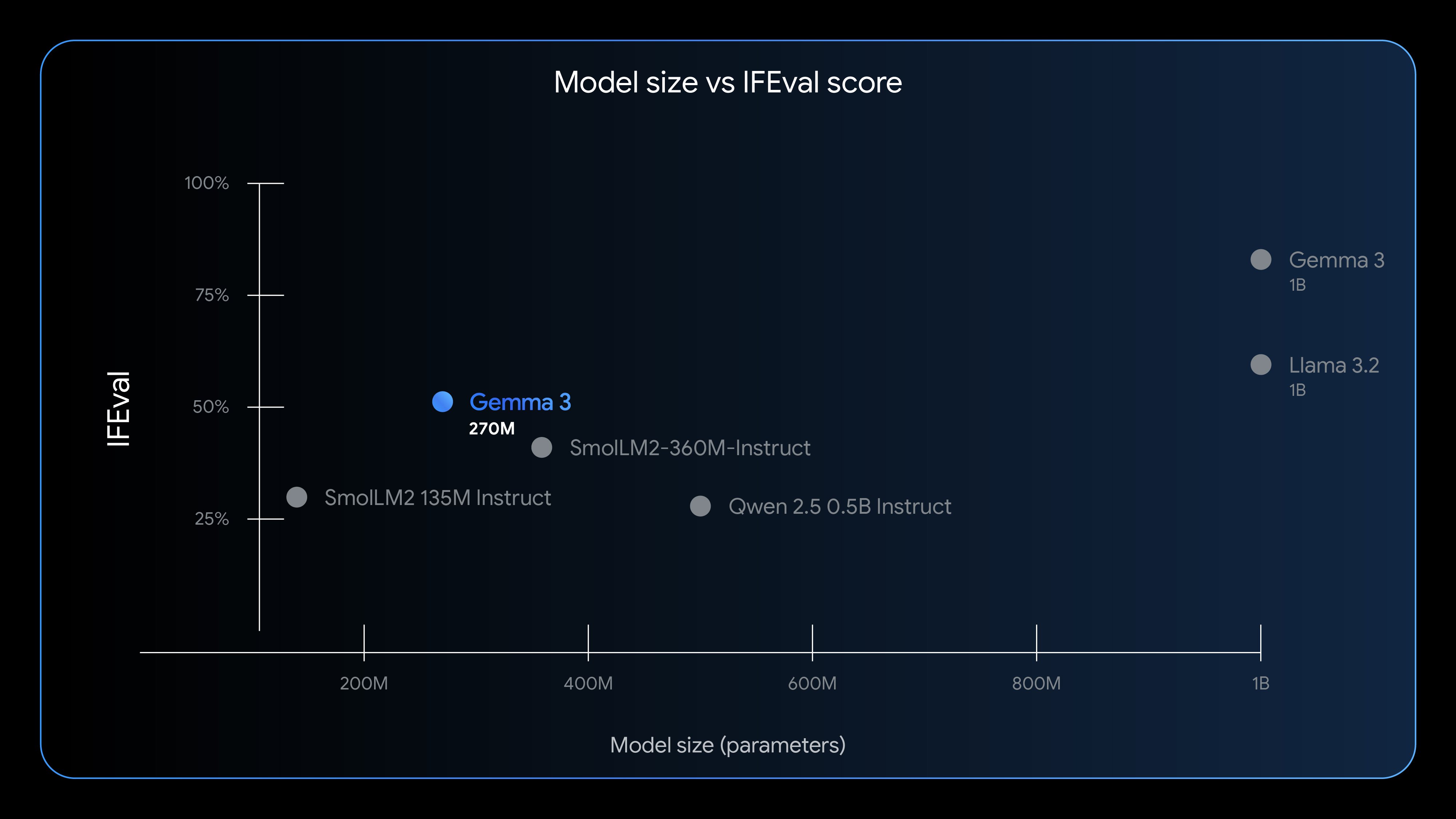

DeepMind releases Gemma 3 270M, a very small and very capable open-weight model. I’ve been meaning to do something interesting with a small model for some time, and this one might have come at the right time.

Other things I read this week

Why LLMs can't build software by Conrad Irwin. I loved the succinctness of framing software building as the difference between two mental models - one of how the software currently works and what it does, and one of what it should do. For LLMs this is essentially a problem of context gathering and management, which is currently limited by context windows (see above about Claude’s recent change) and product design. Something that LLM products don’t do but should is ask more questions after a user prompt to clarify their understanding of what you ask. Something I added to my Claude preferences some time ago was a request that it should ask for clarification when required.

What's the strongest AI model you can train on a laptop in five minutes? A terrific article that provides some interesting intuitions about the relationship between corpus complexity, model architecture and the number of tokens you can train on per second. Probably the best thing I read this week. 👌

A fun GitHub issue about Claude’s ‘you’re absolutely right!’ sycophancy.